This page is still a work in progress and will be updated.

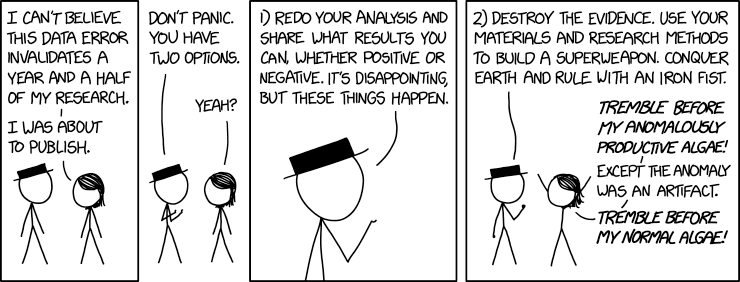

Datalad is a Python framework for organising datasets and providing reproducibility, so that we run into fewer incidents like the one pictured above and do not have to resort to destroying all evidence.

The basic idea is not much different from that of a Git repository.

In fact, Datalad uses Git and git-annex under the hood. However, it smooths out the user experience of both and adds some custom features that make working with data easier.

The complete documentation, including tutorials, can be found in the Datalad Handbook.

Be sure to consult it before starting to work with Datalad on PALMA.

Datalad is installed on PALMA via EasyBuild. At the moment, it can only be loaded from the palma/2022b software stack:

```shell
module load palma/2022b
module load GCCcore/12.2.0
module load datalad/0.18.4
```
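Since Datalad relies on Git and git-annex, it can help to check after loading the modules that all three tools are actually available. The following is a minimal sketch; load_datalad_stack and missing_tools are hypothetical helper names, and the module names are the ones listed above.

```shell
# Hypothetical helpers, meant to be sourced in a login shell or job script.

# Load the Datalad stack from the palma/2022b software stack.
load_datalad_stack() {
    module load palma/2022b
    module load GCCcore/12.2.0
    module load datalad/0.18.4
}

# Print every tool name given as an argument that cannot be found on PATH.
missing_tools() {
    local tool
    for tool in "$@"; do
        command -v "$tool" >/dev/null 2>&1 || echo "$tool"
    done
}

# Usage:
#   load_datalad_stack
#   missing=$(missing_tools datalad git git-annex)
#   [ -z "$missing" ] || echo "not found: $missing" >&2
```

An empty result from missing_tools means all listed tools were found.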

There are a few things to note about using Datalad on the cluster.

Running jobs with Datalad

First: As mentioned in the HPC section of the Datalad Handbook, the command datalad run does not work well with parallel jobs, because jobs running in the same dataset would try to modify and save it at the same time.

This leaves two options: either we do not track the actual process with Datalad, which makes our research harder to reconstruct, or we handle the parallel execution in another way.

Currently, the suggested basic workflow is the following:

- We create a new Datalad repository (a dataset) that holds all input data needed for the calculations on the cluster (let's call it the "input dataset"). We can still edit it at a later stage, but the basic structure of the dataset should already be in place.

  If you have already worked with Git or other version control tools, you might know that large changes to the structure of a project are best avoided.

- We create two siblings of the dataset, which will serve as different outputs for our calculations:

  - A simple sibling, which we will use later to track the calculations run on our dataset.

  - A storage sibling, e.g. a RIA store. In our workflow, this sibling is only used to store the data from the jobs completed by SLURM.

- We run all calculations described in the input dataset via SLURM. For each calculation, we clone the dataset into a temporary directory, run the calculation there, save the results to the outputs, and delete the temporary clone afterwards.

- After all jobs have completed, we collect the results from the storage sibling, which links each result directly to the action that produced it.

This workflow is based on this article and has a few advantages over the workflow originally suggested in the Datalad Handbook. In particular, it keeps the cost of cloning to a minimum, as each job only needs to clone the input dataset and not the output data.

Now we would like to implement this workflow on PALMA. We suggest keeping the input dataset in the home directory and the two output datasets in the scratch directory ($WORK).

This can be done with the following commands:

```shell
# create the input dataset in the home directory
cd $HOME
datalad create <nameofrepository>

# create the first output dataset (the "sink") on scratch as a clone of the input dataset
cd $WORK
datalad clone <path/to/repository> ./<nameofrepository>_sink

# make sure the input dataset knows about the sink
cd $HOME/<nameofrepository>
datalad siblings add -s sink --url $WORK/<nameofrepository>_sink

# create a RIA store sibling to push the data to
datalad create-sibling-ria -s ria --new-store-ok --alias <nameofrepository> --shared group --group <mymaingroup> -R 0 ria+file://$WORK/my_ria
```
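The store location passed to create-sibling-ria is a URL of the form ria+file:// followed by an absolute path. If you script the setup, a small helper can build that URL from a directory and turn relative paths into absolute ones first. This is a sketch; ria_file_url is a hypothetical name, and it does not check that the directory exists.

```shell
# Hypothetical helper: build a ria+file URL for a RIA store directory.
# A relative path is resolved against the current working directory, so the
# resulting URL always contains an absolute path.
ria_file_url() {
    local store="$1"
    case "$store" in
        /*) ;;                           # already absolute
        *) store="${PWD%/}/$store" ;;    # make a relative path absolute
    esac
    printf 'ria+file://%s\n' "$store"
}

# Usage (placeholders and further options as in the commands above):
#   datalad create-sibling-ria -s ria --new-store-ok --alias <nameofrepository> \
#       --shared group --group <mymaingroup> "$(ria_file_url "$WORK/my_ria")"
```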

Keep in mind that this is only a suggested configuration and it is up to you how to implement it for your workflow.

Now you can fill the input dataset with the data needed for your calculations. Just as Git does with submodules, Datalad lets you nest datasets inside each other (subdatasets). If your calculations need large input datasets, we suggest adding them as subdatasets, so that you do not have to copy large amounts of data into your home directory. Alternatively, you can place the whole setup in the scratch directory.
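A large input dataset can be registered as a subdataset with datalad clone and its -d option, which records the clone in the given superdataset. The annexed file content is not copied at that point; it is retrieved later with datalad get when it is actually needed. The sketch below wraps this in a hypothetical helper; the argument names and example paths are placeholders.

```shell
# Hypothetical helper: register a large dataset as a subdataset of the input
# dataset instead of copying its files into $HOME.
add_input_subdataset() {
    local superds="$1"   # input dataset, e.g. $HOME/<nameofrepository>
    local src="$2"       # path or URL of the large dataset
    local subpath="$3"   # location inside the input dataset, e.g. inputs/raw
    # -d registers the clone as a subdataset of $superds; passing an absolute
    # target path avoids ambiguity about what a relative path refers to
    datalad clone -d "$superds" "$src" "$superds/$subpath"
}

# Usage (placeholders):
#   add_input_subdataset "$HOME/<nameofrepository>" /path/to/large/dataset inputs/raw
#   # later, fetch only the content that is needed:
#   cd "$HOME/<nameofrepository>" && datalad get inputs/raw/<some file>
```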

Lastly, we need to adapt the SLURM scripts to incorporate Datalad into the jobs on PALMA.

Generally, the submit script has the following structure:

- SBATCH options
- set up the working directory
- set up a separate Datalad dataset (a temporary clone)
- run the script
- push the results to the output datasets

Example:

```shell
#!/bin/bash

##### SET UP SLURM OPTIONS AS USUAL
#SBATCH --nodes=...
#SBATCH --ntasks=...
#SBATCH --partition=...
#SBATCH --time=...
#SBATCH --mail-type=...
#SBATCH --mail-user=...
#SBATCH --job-name=...
#SBATCH -o ...
# ... further #SBATCH options as needed
#####SBATCH -d singleton

# pick source repo and temporary working directory
MYSOURCE=<datalad repository>
WDIR=/mnt/beeond

# load the software environment (site-specific, adapt this to your setup)
source ${WDIR}/envs/intel.env

# clone the datalad dataset into the working directory
cd ${WDIR}
# flock is used here in case two jobs start at the same time
flock ${MYSOURCE}.lock datalad clone ${MYSOURCE} ds_${SLURM_JOB_ID}_${SLURM_ARRAY_TASK_ID}
cd ds_${SLURM_JOB_ID}_${SLURM_ARRAY_TASK_ID}

# prepare the dataset for the job: mark this temporary clone as dead, so that
# git-annex does not try to get data from it once it is deleted
git annex dead here
# check out a new branch; in an array job SLURM_JOB_ID differs between tasks,
# while SLURM_ARRAY_JOB_ID is shared, so the collect script finds all branches
git checkout -b job_${SLURM_ARRAY_JOB_ID:-${SLURM_JOB_ID}}_${SLURM_ARRAY_TASK_ID}

# set up output directory
outdir=...
mkdir -p ${outdir}

# run the actual job
# if your job consists of multiple programs, consider placing them in separate datalad run commands
datalad run -i "specify one input here" -i "and the next one here" -o "same for outputs" "<PROGRAM GOES HERE>"

# push the results; use the same lock file as for the clone
flock ${MYSOURCE}.lock datalad push --to origin
```

In the example above, we use /mnt/beeond as our temporary file system. It has been observed that this file system is not always stable. If you are working on a single node only, you can use /tmp instead (/tmp is local to each node, so it is not suitable for multi-node jobs). If BeeOND does not work for you and you are using multiple nodes, you can also create your own temporary directory. In that case, you need to take care of dropping the data at the end of the script yourself. This is most easily done with

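```shell
datalad drop *
cd ..
# git-annex write-protects annexed content, so make it writable before deleting
chmod -R u+w ds_${SLURM_JOB_ID}_${SLURM_ARRAY_TASK_ID}
rm -rf ds_${SLURM_JOB_ID}_${SLURM_ARRAY_TASK_ID}
```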

at the end of the script.
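The choice of working directory and the cleanup can also be scripted. The sketch below assumes the preference order described above (BeeOND if it is mounted and writable, /tmp for single-node jobs, otherwise a job-specific directory on $WORK) and is meant to be used with an EXIT trap, so that the clone is also removed when the job fails. pick_workdir, cleanup_clone and the tmp_<jobid> directory name are hypothetical; SLURM_JOB_NUM_NODES and SLURM_JOB_ID are set by SLURM inside a job.

```shell
# Hypothetical helpers, meant to be sourced in the submit script.

# Print the directory to work in. $1 is the BeeOND mount point, e.g. /mnt/beeond.
pick_workdir() {
    local beeond="$1"
    if [ -d "$beeond" ] && [ -w "$beeond" ]; then
        echo "$beeond"
    elif [ "${SLURM_JOB_NUM_NODES:-1}" -eq 1 ]; then
        echo /tmp                           # node-local, fine for single-node jobs
    else
        echo "${WORK}/tmp_${SLURM_JOB_ID}"  # assumed shared directory on scratch
    fi
}

# Remove a temporary clone. git-annex write-protects annexed content and its
# directories, so write permission is restored before deleting.
cleanup_clone() {
    local clone="$1"
    [ -d "$clone" ] || return 0
    chmod -R u+w "$clone"
    rm -rf "$clone"
}

# Usage in the submit script:
#   WDIR=$(pick_workdir /mnt/beeond)
#   mkdir -p "$WDIR"
#   CLONE="${WDIR}/ds_${SLURM_JOB_ID}_${SLURM_ARRAY_TASK_ID}"
#   trap 'cleanup_clone "$CLONE"' EXIT
```

This variant only deletes the files and does not call datalad drop. If pick_workdir returned a self-made directory on $WORK, that directory itself also has to be removed at the end.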

When specifying the inputs, and especially the outputs, list all the files that are needed, but nothing else.

Be careful with wildcards such as *!
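As an illustration of listing exactly the files that are needed, the hypothetical helper below runs one analysis step for a single sample. The file names, the sample naming scheme and ./analyse.sh are placeholders, not part of the workflow above. The paths are quoted so that the shell does not expand anything itself, and datalad run replaces the {inputs} and {outputs} placeholders in the command with the paths given via -i and -o.

```shell
# Hypothetical example: one datalad run call with explicit inputs and outputs.
# inputs/<sample>.csv, results/<sample>.txt and ./analyse.sh are placeholders.
run_sample() {
    local sample="$1"
    datalad run \
        -m "Analyse sample ${sample}" \
        -i "inputs/${sample}.csv" \
        -o "results/${sample}.txt" \
        "./analyse.sh {inputs} {outputs}"
}

# Usage inside the temporary clone of a job, e.g. one sample per array task:
#   run_sample "sample_${SLURM_ARRAY_TASK_ID}"
```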

After this, the original repository contains a number of branches, named according to the scheme used in the script above. Now we write a "collect_job" script, which looks roughly like this:

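```shell
#!/bin/bash

# job ID passed as the first argument
JOB_ID=${1:?usage: collect_job.sh <job-id>}

# all branches created by this job: job_<JOB_ID>_<task>
BRANCHES=$(git branch --list "job_${JOB_ID}_*" --format='%(refname:short)')
if [ -z "${BRANCHES}" ]; then
    echo "no branches found for job ${JOB_ID}" >&2
    exit 1
fi

# merge all job branches into the current branch (e.g. master)
git merge -m "Merge results from job ${JOB_ID}" ${BRANCHES} || exit 1

# delete the merged branches
git branch -d ${BRANCHES}
```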

Run this script inside the original repository, with the job ID as its only argument.
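The array job and the collect step can be chained with a SLURM dependency, so that the merge only starts after every array task has finished successfully. In the sketch below, submit.sh and collect_job.sh stand for the two scripts on this page (the file names are assumptions); sbatch --parsable prints the job ID (followed by ;cluster on multi-cluster setups), and afterok on an array job ID waits for all of its tasks. This assumes the job branches carry the array job ID (SLURM_ARRAY_JOB_ID), because for array jobs SLURM_JOB_ID differs from task to task. Partition, time and other sbatch options for the collect job are omitted.

```shell
# Sketch: submit the array job, then a collect job that only runs if all tasks succeed.
# submit_and_collect is a hypothetical helper name.
submit_and_collect() {
    local repo="$1"      # repository the jobs push their branches to
    local collect="$2"   # absolute path to collect_job.sh
    local array="$3"     # array index range, e.g. 0-9
    local jobid
    jobid=$(sbatch --parsable --array="$array" submit.sh) || return 1
    jobid="${jobid%%;*}"  # strip ";cluster" if present
    sbatch --dependency="afterok:${jobid}" \
        --wrap="cd '${repo}' && bash '${collect}' ${jobid}"
}

# Usage (placeholders):
#   submit_and_collect "<datalad repository>" "$HOME/collect_job.sh" 0-9
```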