The batch (job scheduling) system on PALMA-II is Slurm. If you are used to PBS/Maui and want to switch to Slurm, this document might help you. The job scheduler starts and manages computations on the cluster and distributes resources among all users according to their needs. Compute jobs and interactive sessions can be submitted to different queues (called partitions in Slurm terminology), each of which serves a different purpose:

Partitions

Available for everyone:

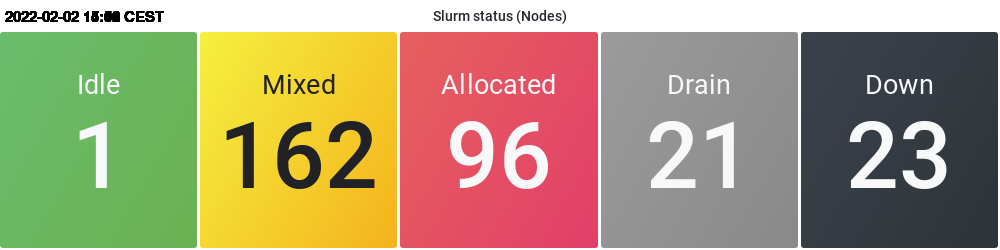

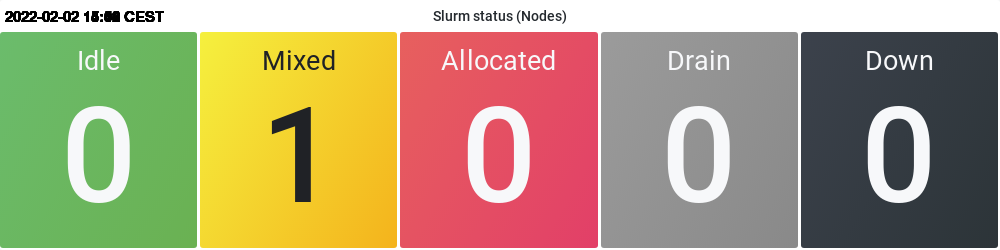

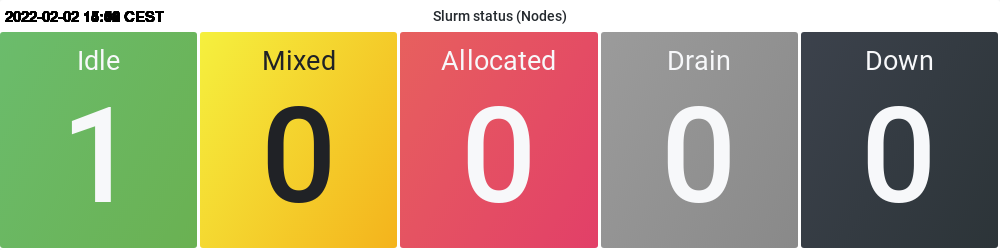

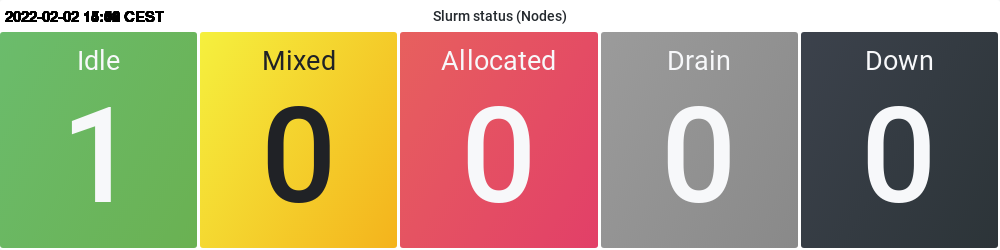

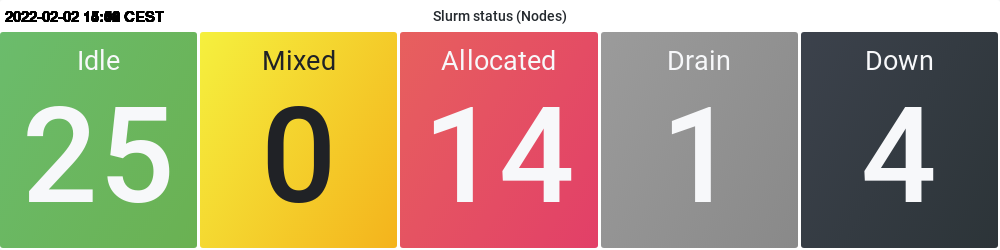

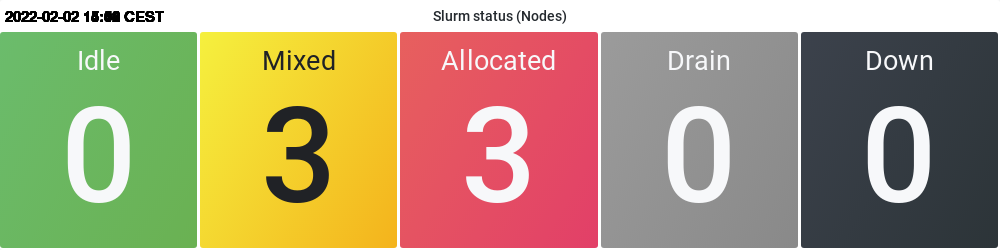

| Name | Purpose | CPU Arch | # Nodes | # GPUs | max. CPUs (threads) / node | max. Mem / node | max. Walltime | Slurm Status |

|---|---|---|---|---|---|---|---|---|

| normal | general computations | Skylake (Gold 5118) | 143 160 | - | 36 (72) | 92 GB 192 GB | 7 days | |

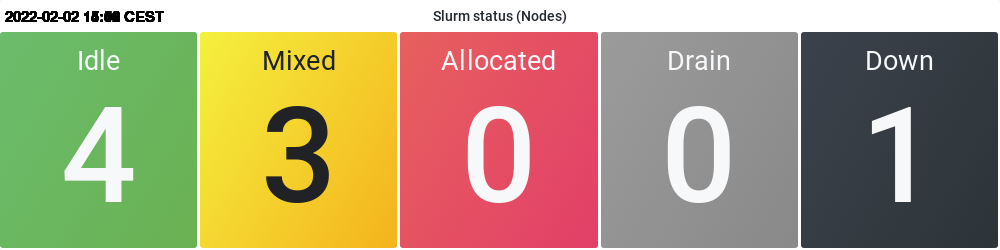

| express | short running (test) jobs | Skylake (Gold 5118) | 5 | - | 36 (72) | 92 GB | 2 hours | |

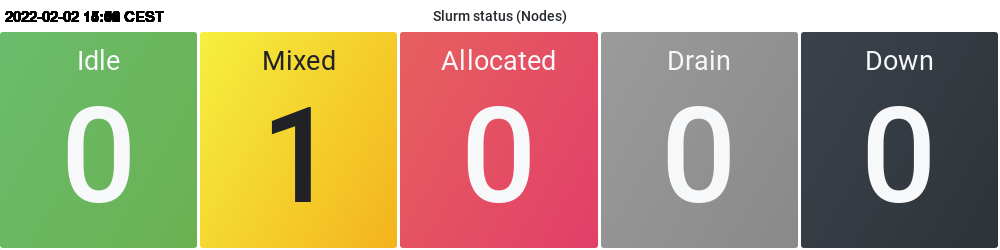

| bigsmp | SMP | Skylake (Gold 5118) | 3 | - | 72 (144) | 1.5 TB | 7 days | |

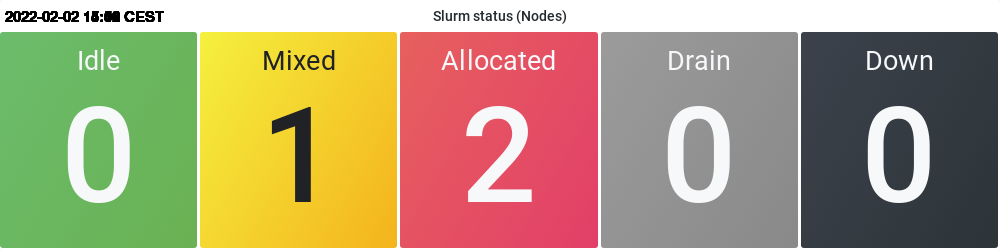

| largesmp | SMP | Skylake (Gold 5118) | 2 | - | 72 (144) | 3 TB | 7 days | |

| requeue* | This queue will use the free nodes from | Skylake (Gold 5118) | 68 50 3 | - | 36 (72) 36 (72) 72 (144) | 92 GB 192 GB 1.5 TB | 24 hours | |

| gpuv100 | Nvidia V100 GPUs | Skylake (Gold 5118) | 1 | 4 | 24 | 192 GB | 2 days | |

| vis-gpu | Nvidia Titan XP | Skylake (Gold 5118) | 1 | 8 | 24 | 192 GB | 2 days | |

| vis | Visualization / GUIs | Skylake (Gold 5118) | 1 | - | 36 (72) | 92 GB | 2 hours | |

| broadwell | Legacy Broadwell CPUs | Broadwell | 44 | - | 32 (64) | 118 GB | 7 days | |

| gpuk20 | Nvidia K20 GPUs | Ivybridge (E5-2640 v2) | 4 | 3 | 16 (32) | 125 GB | 2 days | |

| gputitanrtx | Nvidia Titan RTX | Zen3 | 1 | 4 | 32(32) | 240 GB | 2 days | |

| gpu2080 | GeForce RTX 2080 Ti | Ivybridge (E5-2695 v2) | 12 | 4 | 24 (48) | 125 GB | 2 days | |

| knl | Legacy Knightslanding CPUs | KNL | 4 | 64 (256) | 188 GB | 7 days |
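Choosing a partition from the table above comes down to checking a job's CPU, memory, GPU and walltime needs against each partition's limits. The following is a minimal sketch of that check, not an official PALMA-II tool. The names `Partition`, `pick_partition` and `sbatch_header` are hypothetical, the list holds only a subset of the table (memory in GB, with 1.5 TB and 3 TB approximated as 1500 and 3000), and the generated `#SBATCH` lines use standard `sbatch` options whose site-specific behaviour (for example how GPUs are requested via `--gres`, or how much memory is actually allocatable per node) is an assumption.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Partition:
    name: str
    max_cpus: int     # physical cores per node
    max_mem_gb: int   # largest memory per node, in GB (approximate)
    gpus: int         # GPUs on a node, 0 if none
    max_hours: int    # walltime limit in hours


# Subset of the public partitions listed in the table, ordered from the
# most restrictive to the least restrictive limits.
PARTITIONS: List[Partition] = [
    Partition("express", 36, 92, 0, 2),
    Partition("normal", 36, 192, 0, 7 * 24),
    Partition("bigsmp", 72, 1500, 0, 7 * 24),
    Partition("largesmp", 72, 3000, 0, 7 * 24),
    Partition("gpuv100", 24, 192, 4, 2 * 24),
]


def pick_partition(cpus: int, mem_gb: int, hours: int, gpus: int = 0) -> Optional[Partition]:
    """Return the first partition whose per-node limits cover the request."""
    for p in PARTITIONS:
        if gpus == 0 and p.gpus > 0:
            continue  # keep GPU nodes free for jobs that actually need GPUs
        if (p.max_cpus >= cpus and p.max_mem_gb >= mem_gb
                and p.max_hours >= hours and p.gpus >= gpus):
            return p
    return None


def sbatch_header(p: Partition, cpus: int, mem_gb: int, hours: int, gpus: int = 0) -> str:
    """Render a single-node job script header for the chosen partition."""
    lines = [
        "#!/bin/bash",
        f"#SBATCH --partition={p.name}",
        "#SBATCH --nodes=1",
        f"#SBATCH --cpus-per-task={cpus}",
        f"#SBATCH --mem={mem_gb}G",
        # days-hours:minutes:seconds walltime format
        f"#SBATCH --time={hours // 24}-{hours % 24:02d}:00:00",
    ]
    if gpus:
        lines.append(f"#SBATCH --gres=gpu:{gpus}")  # assumed GPU request syntax
    return "\n".join(lines)
```

For instance, `pick_partition(4, 8, 1)` selects `express` in this sketch, because a one-hour, four-core test job fits within its two-hour limit, while a request exceeding every walltime limit returns `None`.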

requeue*

If your job is running on one of the requeue nodes when that node is requested by one of the group-exclusive partitions, your job will be terminated and resubmitted, so use this partition with care!
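Because a job in the requeue partition can be terminated and resubmitted at any time, it helps if the job can resume from where it stopped instead of starting over. The following is a minimal sketch of one common way to do that, periodic checkpointing, and is not something this page prescribes. The function names, the JSON checkpoint format and the toy workload (summing step indices) are all hypothetical, and the `stop_after` parameter exists only to simulate an interruption.

```python
import json
import os
from pathlib import Path
from typing import Optional


def load_checkpoint(path: Path) -> dict:
    """Return the saved state, or a fresh state if no checkpoint exists yet."""
    if path.exists():
        return json.loads(path.read_text())
    return {"next_step": 0, "total": 0}


def save_checkpoint(path: Path, state: dict) -> None:
    """Write the state to a temporary file, then rename it over the old one.

    The rename is atomic, so a job killed mid-write leaves the previous
    checkpoint intact rather than a half-written file.
    """
    tmp = path.with_suffix(path.suffix + ".tmp")
    tmp.write_text(json.dumps(state))
    os.replace(tmp, path)


def run(path: Path, n_steps: int, stop_after: Optional[int] = None) -> dict:
    """Run the remaining steps, saving a checkpoint after each one."""
    state = load_checkpoint(path)
    done = 0
    while state["next_step"] < n_steps:
        if stop_after is not None and done == stop_after:
            return state  # simulated termination by the scheduler
        state["total"] += state["next_step"]  # stand-in for real work
        state["next_step"] += 1
        save_checkpoint(path, state)
        done += 1
    return state
```

When the resubmitted job calls `run` again with the same checkpoint path, it continues from the saved `next_step`. Slurm's batch environment also provides a `SLURM_RESTART_COUNT` variable for requeued jobs, which a script could read to log that it is a restart.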

Group exclusive:

| Name | # Nodes | max. CPUs (threads) / node | max. Mem / node | max. Walltime |
|---|---|---|---|---|
| p0fuchs | 9 | 36 (72) | 92 GB | 7 days |
| p0kulesz | 6 / 3 | 36 (72) | 92 GB / 192 GB | 7 days |
| p0kapp | 1 | 36 (72) | 92 GB | 7 days |
| p0klasen | 1 / 1 | 36 (72) | 92 GB / 192 GB | 7 days |
| hims | 25 / 1 | 36 (72) | 92 GB / 192 GB | 7 days |
| d0ow | 1 | 36 (72) | 92 GB | 7 days |
| q0heuer | 15 | 36 (72) | 92 GB | 7 days |
| e0mi | 2 | 36 (72) | 192 GB | 7 days |
| e0bm | 1 | 36 (72) | 192 GB | 7 days |
| p0rohlfi | 7 / 8 | 36 (72) | 92 GB / 192 GB | 7 days |
| SFB858 | 3 | 72 (144) | 1.5 TB | 21 days |